Building a Multi-Node OpenStack Enterprise Private Cloud: Base Environment Setup

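Reference book: OpenStack云平台部署与高可用实战 (OpenStack Cloud Platform Deployment and High Availability in Practice)

Preparation:

- Install VMware Workstation 15.5.
- Download CentOS-7-x86_64-DVD-1611.iso and complete a minimal installation of CentOS 7.3.
- Host hardware: 16 GB of RAM or more is recommended, with the virtual machines stored on an SSD.

Lab environment: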

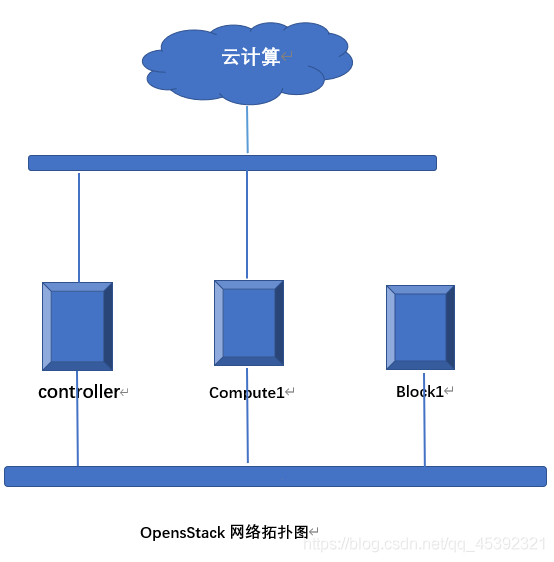

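Lab topology

Usernames and passwords for the installation environment

Installing the virtual machine operating system: perform a minimal installation of CentOS 7.3 and select the Shanghai time zone.

Project implementation

Configuring the base environment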

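1. Configure the YUM repositories

Every node needs the following YUM repository configuration. Taking the controller node as an example:

    [root@controller ~]# yum clean all
    Loaded plugins: fastestmirror
    Cleaning repos: base extras updates
    Cleaning up everything
    [root@controller ~]# mkdir /etc/yum.repos.d/bak
    [root@controller ~]# mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/bak/

Download the Aliyun base repository file. On a minimal installation the first attempt fails because wget is not installed:

    [root@controller ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
    -bash: wget: command not found

Install it with yum install -y wget. Because the default .repo files have just been moved to bak/, yum has no enabled repository at this point, so either install wget before the backup step or temporarily move the files back. Then download both repository files:

    [root@controller ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
    [root@controller ~]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo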
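The two downloads above depend on wget being available. As a minimal sketch, the step could be wrapped in a helper that falls back to curl, which is normally present on a minimal CentOS 7 install. The function names pick_downloader and fetch_repo are hypothetical and not part of the original procedure.

```shell
# Sketch only: pick_downloader and fetch_repo are hypothetical helper names.

# Print the command prefix for whichever downloader is installed.
pick_downloader() {
    if command -v wget >/dev/null 2>&1; then
        echo "wget -O"
    elif command -v curl >/dev/null 2>&1; then
        echo "curl -fsSL -o"
    else
        echo "neither wget nor curl is installed" >&2
        return 1
    fi
}

# fetch_repo DEST URL: download URL to DEST with the available tool.
fetch_repo() {
    dl=$(pick_downloader) || return 1
    # $dl is intentionally unquoted so it splits into command and option.
    $dl "$1" "$2"
}
```

On a node it would be called with the same destinations and URLs as above, for example fetch_repo /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo.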

Remove the aliyuncs entries from both repository files, pin $releasever to 7 in the base repository, and install vim:

    [root@controller ~]# sed -i '/aliyuncs/d' /etc/yum.repos.d/CentOS-Base.repo
    [root@controller ~]# sed -i '/aliyuncs/d' /etc/yum.repos.d/epel.repo
    [root@controller ~]# sed -i 's/$releasever/7/g' /etc/yum.repos.d/CentOS-Base.repo
    [root@controller ~]# yum install -y vim

Create the Ceph repository file:

    [root@controller ~]# vim /etc/yum.repos.d/ceph.repo
    [root@controller ~]# cat /etc/yum.repos.d/ceph.repo
    [ceph]
    name=ceph
    baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/x86_64/
    gpgcheck=0
    [ceph-noarch]
    name=cephnoarch
    baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/noarch/
    gpgcheck=0

2. Configure host name resolution

Every node needs a hosts file for host name resolution. Taking the controller node as an example:

    [root@controller ~]# vim /etc/hosts
    [root@controller ~]# cat /etc/hosts
    127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.16.128 controller
    192.168.16.129 compute1
    192.168.16.34 block1

3. Configure passwordless SSH authentication

Key-based authentication must be configured between all nodes so that they can reach one another over SSH without a password. Taking the controller node as an example:

    [root@controller ~]# ssh-keygen -t rsa
    Generating public/private rsa key pair.
    Enter file in which to save the key (/root/.ssh/id_rsa):
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /root/.ssh/id_rsa.
    Your public key has been saved in /root/.ssh/id_rsa.pub.
    The key fingerprint is:
    e2:1a:38:91:83:b9:cb:11:11:a4:62:64:3a:5c:ce:dc root@controller
    The key's randomart image is:
    +--[ RSA 2048]----+
    |.= . |
    |* * . |
    |=+ + E |
    |o+... |
    |o.+ . S |
    | ...+ . . |
    |...o . . |
    |... o |
    |... . |
    +-----------------+
    [root@controller ~]# ssh-copy-id controller
    The authenticity of host 'controller (192.168.16.128)' can't be established.
    ECDSA key fingerprint is 5d:0d:f9:40:59:d2:f5:22:4d:45:7e:17:74:11:d5:68.
    Are you sure you want to continue connecting (yes/no)? yes
    /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
    root@controller's password:

    Number of key(s) added: 1

    Now try logging into the machine, with: "ssh 'controller'"
    and check to make sure that only the key(s) you wanted were added.
    [root@controller ~]# ssh-copy-id compute1
    The authenticity of host 'compute1 (192.168.16.129)' can't be established.
    ECDSA key fingerprint is 5d:0d:f9:40:59:d2:f5:22:4d:45:7e:17:74:11:d5:68.
    Are you sure you want to continue connecting (yes/no)? yes
    /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
    root@compute1's password:

    Number of key(s) added: 1

    Now try logging into the machine, with: "ssh 'compute1'"
    and check to make sure that only the key(s) you wanted were added.
    [root@controller ~]# ssh-copy-id block1
    The authenticity of host 'block1 (192.168.16.34)' can't be established.
    ECDSA key fingerprint is 5d:0d:f9:40:59:d2:f5:22:4d:45:7e:17:74:11:d5:68.
    Are you sure you want to continue connecting (yes/no)? yes
    /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
    root@block1's password:

    Number of key(s) added: 1

    Now try logging into the machine, with: "ssh 'block1'"
    and check to make sure that only the key(s) you wanted were added.

4. Disable SELinux and the firewalld service

SELinux and firewalld must be disabled on every node. Taking the controller node as an example.

Stop the firewalld service and disable it at boot:

    [root@controller ~]# systemctl stop firewalld
    [root@controller ~]# systemctl disable firewalld
    Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.
    Removed symlink /etc/systemd/system/basic.target.wants/firewalld.service.

Disable SELinux. The setenforce 0 command temporarily switches SELinux to permissive mode, in which violations are only logged as warnings and normal operations are not blocked:

    [root@controller ~]# setenforce 0
    [root@controller ~]# vim /etc/selinux/config

In the configuration file, change SELINUX=enforcing to SELINUX=disabled, save and exit, then reboot. After the reboot, check that the change took effect:

    [root@controller ~]# getenforce
    Disabled

5. Configure time synchronization

The controller node acts as the time source server and synchronizes its own clock from an Internet server. The compute1 and block1 nodes synchronize their time from the controller node.

To configure the controller node as the time source server:

(1) Install chrony

    [root@controller ~]# yum install -y chrony

(2) Change the time source on the controller node. In /etc/chrony.conf, comment out the default time servers and add the Aliyun time server:

    [root@controller ~]# vim /etc/chrony.conf
    [root@controller ~]# cat /etc/chrony.conf
    # Use public servers from the pool.ntp.org project.
    # Please consider joining the pool (http://www.pool.ntp.org/join.html).
    #server 0.centos.pool.ntp.org iburst
    #server 1.centos.pool.ntp.org iburst
    #server 2.centos.pool.ntp.org iburst
    #server 3.centos.pool.ntp.org iburst
    server ntp6.aliyun.com iburst

In the same file, allow the other nodes to synchronize from the controller node:

    [root@controller ~]# vim /etc/chrony.conf
    # Allow NTP client access from local network.
    allow 192.168.16.0/24

(3) Start the chronyd service and view the synchronization status:

    [root@controller ~]# systemctl restart chronyd.service
    [root@controller ~]# systemctl enable chronyd.service
    [root@controller ~]# chronyc sources
    210 Number of sources = 1
    MS Name/IP address         Stratum Poll Reach LastRx Last sample
    ===============================================================================
    ^* 203.107.6.88                  2   6    17    25    +47us[-2649us] +/-   59ms

The compute1 and block1 nodes synchronize from the controller node in the same way. Taking compute1 as an example:

(1) Install chrony

    [root@compute1 ~]# yum install -y chrony

(2) Edit the configuration file: comment out the four default time servers and add the controller node as the time source:

    [root@compute1 ~]# vim /etc/chrony.conf
    [root@compute1 ~]# cat /etc/chrony.conf
    # Use public servers from the pool.ntp.org project.
    # Please consider joining the pool (http://www.pool.ntp.org/join.html).
    #server 0.centos.pool.ntp.org iburst
    #server 1.centos.pool.ntp.org iburst
    #server 2.centos.pool.ntp.org iburst
    #server 3.centos.pool.ntp.org iburst
    server controller iburst

(3) Start the chronyd service and check whether the node is synchronized. In the chronyc sources output, "*" marks the source the node is currently synchronized to:

    [root@compute1 ~]# systemctl restart chronyd.service
    [root@compute1 ~]# systemctl enable chronyd.service
    [root@compute1 ~]# chronyc sources
    210 Number of sources = 1
    MS Name/IP address         Stratum Poll Reach LastRx Last sample
    ===============================================================================
    ^* controller                    3   6    17    14   -136us[-1580us] +/-  112ms

The block1 node is configured the same way.

6. Install the OpenStack packages on the controller and compute1 nodes

Install the RDO release repository or, as the bracketed alternative in these notes, the Ocata release package:

    [root@controller ~]# yum install -y https://www.rdoproject.org/repos/rdo-release.rpm
    [root@controller ~]# yum install -y centos-release-openstack-ocata

Then fix the repository path, upgrade the system, and install the client packages:

    [root@controller ~]# sed -i 's/$contentdir/centos-7/g' /etc/yum.repos.d/rdo-qemu-ev.repo
    [root@controller ~]# yum upgrade -y
    [root@controller ~]# yum install -y python-openstackclient
    [root@controller ~]# yum install -y openstack-selinux

7. Install and configure the MySQL database

Perform the following steps on the controller node.

(1) Install the MariaDB database

    [root@controller ~]# yum install -y mariadb mariadb-server python2-PyMySQL
(2) Add a MySQL configuration file with the following content:

    [root@controller ~]# vim /etc/my.cnf.d/openstack.cnf
    [mysqld]
    bind-address=192.168.16.128
    default-storage-engine=innodb
    innodb_file_per_table
    collation-server=utf8_general_ci
    character-set-server=utf8

Set bind-address to the management network IP address of the controller node.

(3) Start the MariaDB service and enable it at boot:

    [root@controller ~]# systemctl start mariadb.service
    [root@controller ~]# systemctl enable mariadb.service
    Created symlink from /etc/systemd/system/mysql.service to /usr/lib/systemd/system/mariadb.service.
    Created symlink from /etc/systemd/system/mysqld.service to /usr/lib/systemd/system/mariadb.service.
    Created symlink from /etc/systemd/system/multi-user.target.wants/mariadb.service to /usr/lib/systemd/system/mariadb.service.

(4) Run the MariaDB security configuration script and set the root password when prompted. This guide uses "000000" for all passwords:

    [root@controller ~]# mysql_secure_installation

    NOTE: RUNNING ALL PARTS OF THIS SCRIPT IS RECOMMENDED FOR ALL MariaDB SERVERS IN PRODUCTION USE! PLEASE READ EACH STEP CAREFULLY!

    In order to log into MariaDB to secure it, we'll need the current password for the root user. If you've just installed MariaDB, and you haven't set the root password yet, the password will be blank, so you should just press enter here.

    Enter current password for root (enter for none):
    OK, successfully used password, moving on...

    Setting the root password ensures that nobody can log into the MariaDB root user without the proper authorisation.

    Set root password? [Y/n] y
    New password:
    Re-enter new password:
    Password updated successfully!
    Reloading privilege tables..
     ... Success!

    By default, a MariaDB installation has an anonymous user, allowing anyone to log into MariaDB without having to have a user account created for them. This is intended only for testing, and to make the installation go a bit smoother. You should remove them before moving into a production environment.

    Remove anonymous users? [Y/n] y
     ... Success!

    Normally, root should only be allowed to connect from 'localhost'. This ensures that someone cannot guess at the root password from the network.

    Disallow root login remotely? [Y/n] n
     ... skipping.

    By default, MariaDB comes with a database named 'test' that anyone can access. This is also intended only for testing, and should be removed before moving into a production environment.

    Remove test database and access to it? [Y/n] y
     - Dropping test database...
     ... Success!
     - Removing privileges on test database...
     ... Success!

    Reloading the privilege tables will ensure that all changes made so far will take effect immediately.

    Reload privilege tables now? [Y/n] y
     ... Success!

    Cleaning up...

    All done! If you've completed all of the above steps, your MariaDB installation should now be secure.

    Thanks for using MariaDB!

8. Install and configure RabbitMQ

Perform the following steps on the controller node.

(1) Install the RabbitMQ message queue service

    [root@controller ~]# yum install -y rabbitmq-server

(2) Start the RabbitMQ service and enable it at boot:

    [root@controller ~]# systemctl start rabbitmq-server.service
    [root@controller ~]# systemctl enable rabbitmq-server.service
    Created symlink from /etc/systemd/system/multi-user.target.wants/rabbitmq-server.service to /usr/lib/systemd/system/rabbitmq-server.service.

(3) Create the message queue user openstack, with the password set to 000000:

    [root@controller ~]# rabbitmqctl add_user openstack 000000
    Creating user "openstack"

(4) Grant the openstack user configure, write, and read permissions on all resources:

    [root@controller ~]# rabbitmqctl set_permissions openstack ".*" ".*" ".*"
    Setting permissions for user "openstack" in vhost "/"

9. Install and configure Memcached

Install and configure the Memcached service on the controller node.

(1) Install the service

    [root@controller ~]# yum install -y memcached python-memcached

(2) Edit the configuration file

    [root@controller ~]# vim /etc/sysconfig/memcached
    [root@controller ~]# cat /etc/sysconfig/memcached
    PORT="11211"
    USER="memcached"
    MAXCONN="1024"
    CACHESIZE="64"
    OPTIONS="-l 127.0.0.1,::1,controller"

(3) Start the service and enable it at boot:

    [root@controller ~]# systemctl start memcached.service
    [root@controller ~]# systemctl enable memcached.service
    Created symlink from /etc/systemd/system/multi-user.target.wants/memcached.service to /usr/lib/systemd/system/memcached.service.

This completes the base environment.
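With every service started, a quick sanity check on the controller can confirm that nothing was missed. The following is a minimal sketch, not part of the original procedure: check_services is a hypothetical helper that takes the status command as its first argument, followed by the unit names to check.

```shell
# Sketch only: check_services is a hypothetical helper name.
# Usage: check_services "CHECK COMMAND" unit [unit...]
# Prints one status line per unit and returns 1 if any check fails.
check_services() {
    checker=$1
    shift
    failed=0
    for svc in "$@"; do
        # $checker is intentionally unquoted so it splits into words.
        if $checker "$svc" >/dev/null 2>&1; then
            echo "$svc: active"
        else
            echo "$svc: NOT active"
            failed=1
        fi
    done
    return "$failed"
}
```

On the controller node it could be run as check_services "systemctl is-active --quiet" chronyd mariadb rabbitmq-server memcached, which covers the units enabled in this guide.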