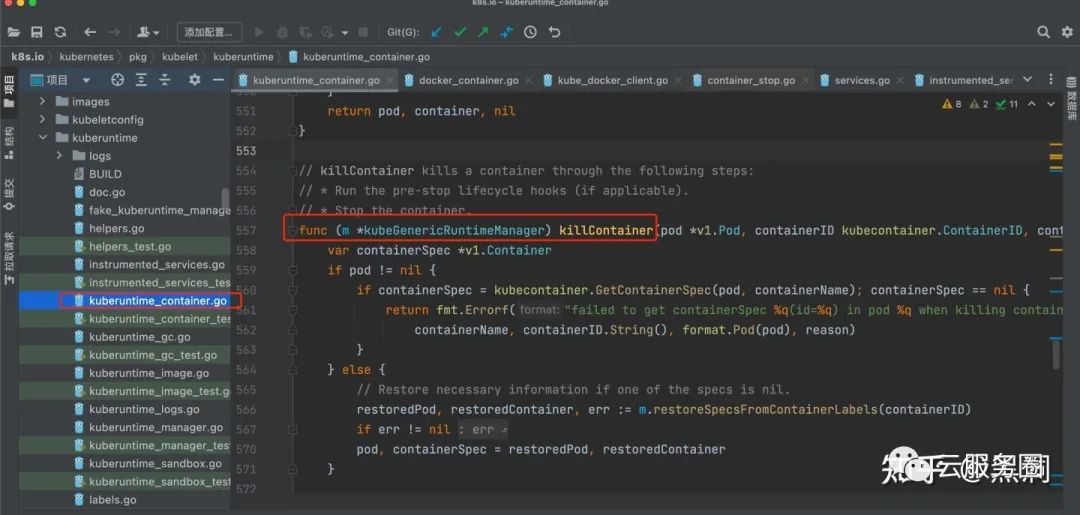

kubernetes/pkg/kubelet/kuberuntime/kuberuntime_container.go

m.killContainer --> m.internalLifecycle.PreStopContainer --> m.runtimeService.StopContainer

- for _, container := range runningPod.Containers {

go func(container *kubecontainer.Container) {

defer utilruntime.HandleCrash()

defer wg.Done()

killContainerResult := kubecontainer.NewSyncResult(kubecontainer.KillContainer, container.Name)

if err := m.killContainer(pod, container.ID, container.Name, "Need to kill Pod", gracePeriodOverride); err != nil {

killContainerResult.Fail(kubecontainer.ErrKillContainer, err.Error())

}

containerResults <- killContainerResult

}(container)

}

- if err := m.internalLifecycle.PreStopContainer(containerID.ID); err != nil { return err }

- err := m.runtimeService.StopContainer(containerID.ID, gracePeriod)

// killContainersWithSyncResult kills all pod's containers with sync results.
func (m *kubeGenericRuntimeManager) killContainersWithSyncResult(pod *v1.Pod, runningPod kubecontainer.Pod, gracePeriodOverride *int64) (syncResults []*kubecontainer.SyncResult) {
    containerResults := make(chan *kubecontainer.SyncResult, len(runningPod.Containers))
    wg := sync.WaitGroup{}

    wg.Add(len(runningPod.Containers))
    for _, container := range runningPod.Containers {
        go func(container *kubecontainer.Container) {
            defer utilruntime.HandleCrash()
            defer wg.Done()

            killContainerResult := kubecontainer.NewSyncResult(kubecontainer.KillContainer, container.Name)
            if err := m.killContainer(pod, container.ID, container.Name, "Need to kill Pod", gracePeriodOverride); err != nil {
                killContainerResult.Fail(kubecontainer.ErrKillContainer, err.Error())
            }
            containerResults <- killContainerResult
        }(container)
    }
    wg.Wait()
    close(containerResults)

    for containerResult := range containerResults {
        syncResults = append(syncResults, containerResult)
    }
    return
}
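The fan-out and collect pattern used by killContainersWithSyncResult can be tried in isolation. The following is a minimal sketch, not kubelet code: `result`, `killAll`, and the `kill` callback are hypothetical stand-ins for `kubecontainer.SyncResult`, the method itself, and `m.killContainer`. The final sort is an addition so that the output is stable; the excerpt above returns results in completion order.

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// result is a hypothetical stand-in for kubecontainer.SyncResult.
type result struct {
	Name string
	Err  error
}

// killAll calls kill for every name concurrently and returns one result per name.
func killAll(names []string, kill func(name string) error) []result {
	// Buffered to len(names), so no goroutine ever blocks on send.
	results := make(chan result, len(names))
	var wg sync.WaitGroup
	wg.Add(len(names))
	for _, name := range names {
		go func(name string) {
			defer wg.Done()
			results <- result{Name: name, Err: kill(name)}
		}(name)
	}
	wg.Wait()
	close(results) // safe: every sender has finished

	var out []result
	for r := range results {
		out = append(out, r)
	}
	// Completion order is not deterministic; sort for stable output (sketch only).
	sort.Slice(out, func(i, j int) bool { return out[i].Name < out[j].Name })
	return out
}

func main() {
	kill := func(name string) error {
		if name == "sidecar" {
			return fmt.Errorf("stop failed")
		}
		return nil
	}
	for _, r := range killAll([]string{"app", "sidecar"}, kill) {
		fmt.Printf("%s: %v\n", r.Name, r.Err)
	}
}
```

Because the channel capacity equals the number of containers, waiting on the WaitGroup before draining the channel cannot deadlock.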

func (m *kubeGenericRuntimeManager) killContainer(pod *v1.Pod, containerID kubecontainer.ContainerID, containerName string, reason string, gracePeriodOverride *int64) error {
    var containerSpec *v1.Container
    if pod != nil {
        if containerSpec = kubecontainer.GetContainerSpec(pod, containerName); containerSpec == nil {
            return fmt.Errorf("failed to get containerSpec %q(id=%q) in pod %q when killing container for reason %q",
                containerName, containerID.String(), format.Pod(pod), reason)
        }
    } else {
        // Restore necessary information if one of the specs is nil.
        restoredPod, restoredContainer, err := m.restoreSpecsFromContainerLabels(containerID)
        if err != nil {
            return err
        }
        pod, containerSpec = restoredPod, restoredContainer
    }

    // From this point, pod and container must be non-nil.
    gracePeriod := int64(minimumGracePeriodInSeconds)
    switch {
    case pod.DeletionGracePeriodSeconds != nil:
        gracePeriod = *pod.DeletionGracePeriodSeconds
    case pod.Spec.TerminationGracePeriodSeconds != nil:
        gracePeriod = *pod.Spec.TerminationGracePeriodSeconds
    }

    glog.V(2).Infof("Killing container %q with %d second grace period", containerID.String(), gracePeriod)

    // Run internal pre-stop lifecycle hook
    if err := m.internalLifecycle.PreStopContainer(containerID.ID); err != nil {
        return err
    }

    // Run the pre-stop lifecycle hooks if applicable and if there is enough time to run it
    if containerSpec.Lifecycle != nil && containerSpec.Lifecycle.PreStop != nil && gracePeriod > 0 {
        gracePeriod = gracePeriod - m.executePreStopHook(pod, containerID, containerSpec, gracePeriod)
    }
    // always give containers a minimal shutdown window to avoid unnecessary SIGKILLs
    if gracePeriod < minimumGracePeriodInSeconds {
        gracePeriod = minimumGracePeriodInSeconds
    }
    if gracePeriodOverride != nil {
        gracePeriod = *gracePeriodOverride
        glog.V(3).Infof("Killing container %q, but using %d second grace period override", containerID, gracePeriod)
    }

    err := m.runtimeService.StopContainer(containerID.ID, gracePeriod)
    if err != nil {
        glog.Errorf("Container %q termination failed with gracePeriod %d: %v", containerID.String(), gracePeriod, err)
    } else {
        glog.V(3).Infof("Container %q exited normally", containerID.String())
    }

    message := fmt.Sprintf("Killing container with id %s", containerID.String())
    if reason != "" {
        message = fmt.Sprint(message, ":", reason)
    }
    m.recordContainerEvent(pod, containerSpec, containerID.ID, v1.EventTypeNormal, events.KillingContainer, message)
    m.containerRefManager.ClearRef(containerID)

    return err
}
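The grace period arithmetic in killContainer is easier to follow as a pure function. The sketch below mirrors the order of the steps in the excerpt: pick the deletion or spec value, subtract the time spent in the preStop hook, clamp to the minimum, then apply the override. `effectiveGracePeriod` and its parameters are hypothetical names, and the value 2 for `minimumGracePeriodInSeconds` is an assumption, since the excerpt does not show the constant's definition.

```go
package main

import "fmt"

// minimumGracePeriodInSeconds reuses the constant name from the excerpt.
// The value 2 is an assumption made for this sketch.
const minimumGracePeriodInSeconds = 2

// effectiveGracePeriod is a hypothetical helper that reproduces the order of
// the grace period steps in killContainer. preStopSeconds is the time the
// preStop hook took (0 when there is no hook).
func effectiveGracePeriod(deletion, termination *int64, preStopSeconds int64, override *int64) int64 {
	grace := int64(minimumGracePeriodInSeconds)
	switch {
	case deletion != nil: // pod.DeletionGracePeriodSeconds wins
		grace = *deletion
	case termination != nil: // then pod.Spec.TerminationGracePeriodSeconds
		grace = *termination
	}
	// Time consumed by the preStop hook is deducted from the budget.
	grace -= preStopSeconds
	// Always leave a minimal shutdown window.
	if grace < minimumGracePeriodInSeconds {
		grace = minimumGracePeriodInSeconds
	}
	// An explicit override replaces everything, including the minimum.
	if override != nil {
		grace = *override
	}
	return grace
}

func main() {
	thirty, ten, zero := int64(30), int64(10), int64(0)
	fmt.Println(effectiveGracePeriod(&ten, &thirty, 0, nil))  // deletion value is used
	fmt.Println(effectiveGracePeriod(nil, &thirty, 29, nil))  // clamped to the minimum
	fmt.Println(effectiveGracePeriod(nil, &thirty, 5, &zero)) // override is applied last
}
```

Note that the override is applied after the clamp, so an override of 0 bypasses the minimum window.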

kubernetes/pkg/kubelet/kuberuntime/kuberuntime_manager.go

// killPodWithSyncResult kills a runningPod and returns SyncResult.
// Note: The pod passed in could be nil when kubelet restarted.
func (m *kubeGenericRuntimeManager) killPodWithSyncResult(pod *v1.Pod, runningPod kubecontainer.Pod, gracePeriodOverride *int64) (result kubecontainer.PodSyncResult) {
    killContainerResults := m.killContainersWithSyncResult(pod, runningPod, gracePeriodOverride)
    for _, containerResult := range killContainerResults {
        result.AddSyncResult(containerResult)
    }

    // stop sandbox, the sandbox will be removed in GarbageCollect
    killSandboxResult := kubecontainer.NewSyncResult(kubecontainer.KillPodSandbox, runningPod.ID)
    result.AddSyncResult(killSandboxResult)
    // Stop all sandboxes belongs to same pod
    for _, podSandbox := range runningPod.Sandboxes {
        if err := m.runtimeService.StopPodSandbox(podSandbox.ID.ID); err != nil {
            killSandboxResult.Fail(kubecontainer.ErrKillPodSandbox, err.Error())
            glog.Errorf("Failed to stop sandbox %q", podSandbox.ID)
        }
    }

    return
}

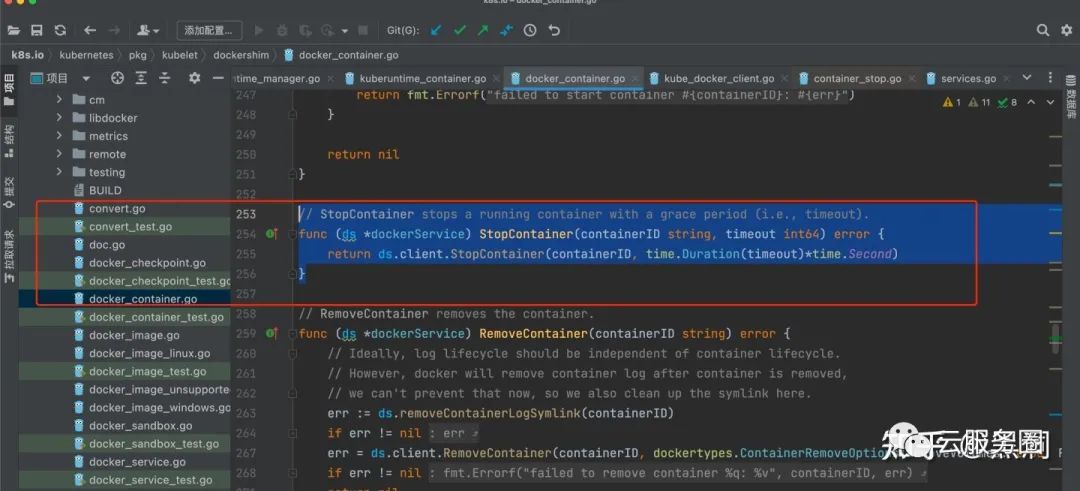

kubernetes/pkg/kubelet/dockershim/docker_container.go

// StopContainer stops a running container with a grace period (i.e., timeout).

func (ds *dockerService) StopContainer(containerID string, timeout int64) error {

return ds.client.StopContainer(containerID, time.Duration(timeout)*time.Second)

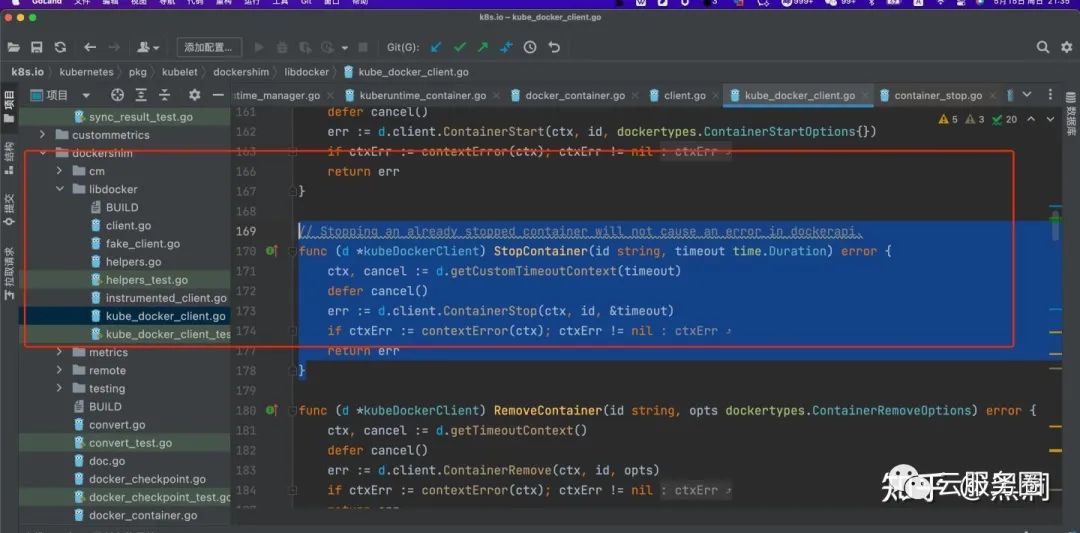

}kubernetes/pkg/kubelet/dockershim/libdocker/kube_docker_client.go

// Stopping an already stopped container will not cause an error in dockerapi.

func (d *kubeDockerClient) StopContainer(id string, timeout time.Duration) error {

ctx, cancel := d.getCustomTimeoutContext(timeout)

defer cancel()

err := d.client.ContainerStop(ctx, id, &timeout)

if ctxErr := contextError(ctx); ctxErr != nil {

return ctxErr

}

return err

}

github.com/docker/docker/client/container_stop.go

// ContainerStop stops a container without terminating the process.

// The process is blocked until the container stops or the timeout expires.

func (cli *Client) ContainerStop(ctx context.Context, containerID string, timeout *time.Duration) error {

query := url.Values{}

if timeout != nil {

query.Set("t", timetypes.DurationToSecondsString(*timeout))

}

resp, err := cli.post(ctx, "/containers/"+containerID+"/stop", query, nil, nil)

ensureReaderClosed(resp)

return err

}
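ContainerStop passes the grace period to the daemon as the `t` query parameter of the stop endpoint. The sketch below only shows how the request path would look; `stopPath` and `seconds` are hypothetical helpers, and the `strconv.FormatFloat` call is a stand-in for `timetypes.DurationToSecondsString`, whose body is not shown in the excerpt.

```go
package main

import (
	"fmt"
	"net/url"
	"strconv"
	"time"
)

// seconds is a hypothetical convenience helper that returns a *time.Duration.
func seconds(n int) *time.Duration {
	d := time.Duration(n) * time.Second
	return &d
}

// stopPath is a hypothetical helper that builds the path and query string
// for a stop request. A nil timeout means "let the daemon pick its default".
func stopPath(containerID string, timeout *time.Duration) string {
	query := url.Values{}
	if timeout != nil {
		// Stand-in for timetypes.DurationToSecondsString (assumption).
		query.Set("t", strconv.FormatFloat(timeout.Seconds(), 'f', 0, 64))
	}
	path := "/containers/" + containerID + "/stop"
	if len(query) > 0 {
		path += "?" + query.Encode()
	}
	return path
}

func main() {
	fmt.Println(stopPath("abc123", seconds(30)))
	fmt.Println(stopPath("abc123", nil))
}
```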

github.com/docker/docke

func runStop(dockerCli command.Cli, opts *stopOptions) error {
    ctx := context.Background()

    var timeout *time.Duration
    if opts.timeChanged {
        timeoutValue := time.Duration(opts.time) * time.Second
        timeout = &timeoutValue
    }

    var errs []string

    errChan := parallelOperation(ctx, opts.containers, func(ctx context.Context, id string) error {
        return dockerCli.Client().ContainerStop(ctx, id, timeout)
    })
    for _, container := range opts.containers {
        if err := <-errChan; err != nil {
            errs = append(errs, err.Error())
            continue
        }
        fmt.Fprintln(dockerCli.Out(), container)
    }
    if len(errs) > 0 {
        return errors.New(strings.Join(errs, "\n"))
    }
    return nil
}

github.com/docker/docke

// containerStop sends a stop signal, waits, sends a kill signal.
func (daemon *Daemon) containerStop(container *containerpkg.Container, seconds int) error {
    if !container.IsRunning() {
        return nil
    }

    stopSignal := container.StopSignal()
    // 1. Send a stop signal
    if err := daemon.killPossiblyDeadProcess(container, stopSignal); err != nil {
        // While normally we might "return err" here we're not going to
        // because if we can't stop the container by this point then
        // it's probably because it's already stopped. Meaning, between
        // the time of the IsRunning() call above and now it stopped.
        // Also, since the err return will be environment specific we can't
        // look for any particular (common) error that would indicate
        // that the process is already dead vs something else going wrong.
        // So, instead we'll give it up to 2 more seconds to complete and if
        // by that time the container is still running, then the error
        // we got is probably valid and so we force kill it.
        ctx, cancel := context.WithTimeout(context.Background(), 2*time.Second)
        defer cancel()

        if status := <-container.Wait(ctx, containerpkg.WaitConditionNotRunning); status.Err() != nil {
            logrus.Infof("Container failed to stop after sending signal %d to the process, force killing", stopSignal)
            if err := daemon.killPossiblyDeadProcess(container, 9); err != nil {
                return err
            }
        }
    }

    // 2. Wait for the process to exit on its own
    ctx := context.Background()
    if seconds >= 0 {
        var cancel context.CancelFunc
        ctx, cancel = context.WithTimeout(ctx, time.Duration(seconds)*time.Second)
        defer cancel()
    }

    if status := <-container.Wait(ctx, containerpkg.WaitConditionNotRunning); status.Err() != nil {
        logrus.Infof("Container %v failed to exit within %d seconds of signal %d - using the force", container.ID, seconds, stopSignal)
        // 3. If it doesn't, then send SIGKILL
        if err := daemon.Kill(container); err != nil {
            // Wait without a timeout, ignore result.
            <-container.Wait(context.Background(), containerpkg.WaitConditionNotRunning)
            logrus.Warn(err) // Don't return error because we only care that container is stopped, not what function stopped it
        }
    }

    daemon.LogContainerEvent(container, "stop")
    return nil
}
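The three numbered steps in containerStop (send the stop signal, wait up to the grace period, then force kill) can be simulated without a Docker daemon. The sketch below is an illustration under stated assumptions: `fakeContainer`, `newFakeContainer`, and `stop` are hypothetical, the stop signal is fixed to SIGTERM for simplicity (the real code uses the container's configured stop signal), and the 2-second fallback branch from the excerpt is left out.

```go
package main

import (
	"context"
	"fmt"
	"sync"
	"time"
)

// fakeContainer is a hypothetical stand-in for a running container.
type fakeContainer struct {
	exited    chan struct{} // closed once the process has exited
	once      sync.Once
	termDelay time.Duration // time to exit after SIGTERM; negative means SIGTERM is ignored
	signals   []string      // signals received, in order
}

func newFakeContainer(termDelay time.Duration) *fakeContainer {
	return &fakeContainer{exited: make(chan struct{}), termDelay: termDelay}
}

func (c *fakeContainer) exit() { c.once.Do(func() { close(c.exited) }) }

// signal records the signal and simulates the process reacting to it.
func (c *fakeContainer) signal(sig string) {
	c.signals = append(c.signals, sig)
	switch {
	case sig == "SIGKILL":
		c.exit() // SIGKILL cannot be ignored
	case sig == "SIGTERM" && c.termDelay >= 0:
		time.AfterFunc(c.termDelay, c.exit)
	}
}

// stop sends SIGTERM, waits up to grace, then falls back to SIGKILL.
func stop(c *fakeContainer, grace time.Duration) string {
	c.signal("SIGTERM") // 1. send the stop signal
	ctx, cancel := context.WithTimeout(context.Background(), grace)
	defer cancel()
	select {
	case <-c.exited: // 2. the process exited on its own
		return "graceful"
	case <-ctx.Done(): // 3. grace period expired, force kill
		c.signal("SIGKILL")
		<-c.exited
		return "killed"
	}
}

func main() {
	fmt.Println(stop(newFakeContainer(10*time.Millisecond), time.Second))
	fmt.Println(stop(newFakeContainer(-1), 50*time.Millisecond))
}
```

A process that handles the stop signal promptly never sees SIGKILL; one that ignores it is killed once the grace period runs out.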


docker-ce/kill.go at 523cf7e71252013fbb6a590be67a54b4a88c1dae · docker/docker-ce

// killPossibleDeadProcess is a wrapper around killSig() suppressing "no such process" error.

func (daemon *Daemon) killPossiblyDeadProcess(container *containerpkg.Container, sig int) error {

err := daemon.killWithSignal(container, sig)

if errdefs.IsNotFound(err) {

e := errNoSuchProcess{container.GetPID(), sig}

logrus.Debug(e)

return e

}

return err

}

github.com/docker/docke

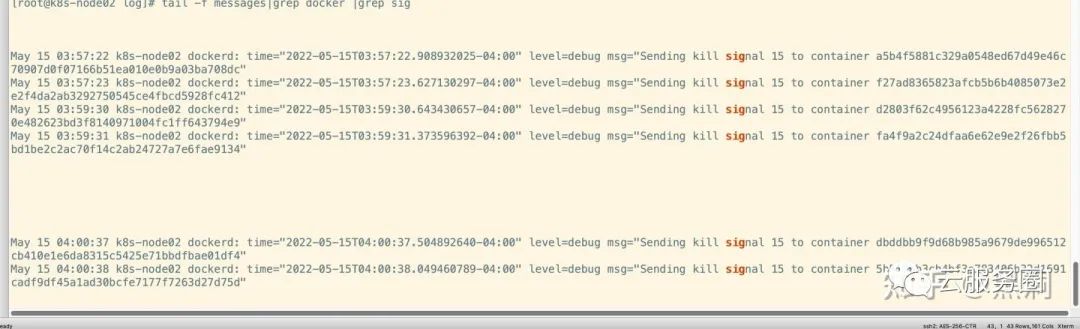

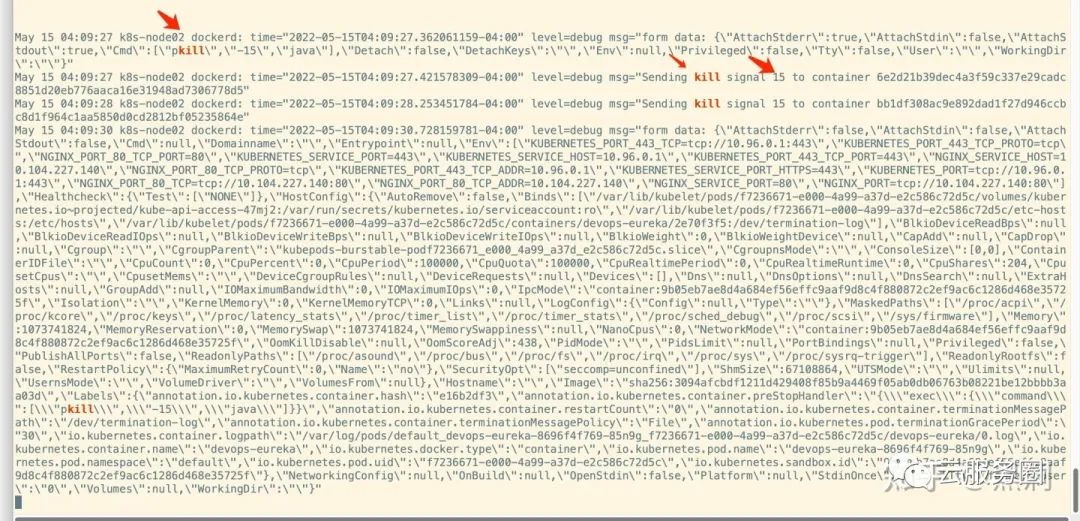


func (daemon *Daemon) killWithSignal(container *containerpkg.Container, sig int) error {
    logrus.Debugf("Sending kill signal %d to container %s", sig, container.ID)
    container.Lock()
    defer container.Unlock()

    if !container.Running {
        return errNotRunning(container.ID)
    }

    var unpause bool
    if container.Config.StopSignal != "" && syscall.Signal(sig) != syscall.SIGKILL {
        containerStopSignal, err := signal.ParseSignal(container.Config.StopSignal)
        if err != nil {
            return err
        }
        if containerStopSignal == syscall.Signal(sig) {
            container.ExitOnNext()
            unpause = container.Paused
        }
    } else {
        container.ExitOnNext()
        unpause = container.Paused
    }

    if !daemon.IsShuttingDown() {
        container.HasBeenManuallyStopped = true
        container.CheckpointTo(daemon.containersReplica)
    }

    // if the container is currently restarting we do not need to send the signal
    // to the process. Telling the monitor that it should exit on its next event
    // loop is enough
    if container.Restarting {
        return nil
    }

    if err := daemon.kill(container, sig); err != nil {
        if errdefs.IsNotFound(err) {
            unpause = false
            logrus.WithError(err).WithField("container", container.ID).WithField("action", "kill").Debug("container kill failed because of 'container not found' or 'no such process'")
            go func() {
                // We need to clean up this container but it is possible there is a case where we hit here before the exit event is processed
                // but after it was fired off.
                // So let's wait the container's stop timeout amount of time to see if the event is eventually processed.
                // Doing this has the side effect that if no event was ever going to come we are waiting a longer period of time unnecessarily.
                // But this prevents race conditions in processing the container.
                ctx, cancel := context.WithTimeout(context.TODO(), time.Duration(container.StopTimeout())*time.Second)
                defer cancel()
                s := <-container.Wait(ctx, containerpkg.WaitConditionNotRunning)
                if s.Err() != nil {
                    daemon.handleContainerExit(container, nil)
                }
            }()
        } else {
            return errors.Wrapf(err, "Cannot kill container %s", container.ID)
        }
    }

    if unpause {
        // above kill signal will be sent once resume is finished
        if err := daemon.containerd.Resume(context.Background(), container.ID); err != nil {
            logrus.Warnf("Cannot unpause container %s: %s", container.ID, err)
        }
    }

    attributes := map[string]string{
        "signal": fmt.Sprintf("%d", sig),
    }
    daemon.LogContainerEventWithAttributes(container, "kill", attributes)
    return nil
}
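The first branch in killWithSignal decides whether the signal being sent should mark the container as exiting (the `container.ExitOnNext()` call). Read as a rule: the container is marked when the signal is SIGKILL, when no stop signal is configured, or when the signal equals the configured stop signal. The sketch below restates that rule as a standalone function; `marksExit`, the constants, and the small lookup table are hypothetical and replace `signal.ParseSignal` and the `syscall` types, using Linux signal numbers as an assumption.

```go
package main

import "fmt"

// Linux signal numbers. These constants and the lookup table are assumptions
// for this sketch; they stand in for syscall.Signal and signal.ParseSignal.
const (
	sigQuit = 3
	sigKill = 9
	sigTerm = 15
)

var signalByName = map[string]int{
	"SIGQUIT": sigQuit,
	"SIGKILL": sigKill,
	"SIGTERM": sigTerm,
}

// marksExit reports whether sending sig would mark the container as
// "exit on next", following the branch structure of killWithSignal.
func marksExit(configuredStopSignal string, sig int) (bool, error) {
	if configuredStopSignal != "" && sig != sigKill {
		stop, ok := signalByName[configuredStopSignal]
		if !ok {
			return false, fmt.Errorf("invalid signal: %s", configuredStopSignal)
		}
		// Only the configured stop signal counts as a stop request.
		return stop == sig, nil
	}
	// SIGKILL, or no stop signal configured: always a stop request.
	return true, nil
}

func main() {
	for _, tc := range []struct {
		configured string
		sig        int
	}{
		{"", sigTerm},
		{"SIGQUIT", sigTerm},
		{"SIGQUIT", sigQuit},
		{"SIGQUIT", sigKill},
	} {
		ok, err := marksExit(tc.configured, tc.sig)
		fmt.Printf("configured=%q sig=%d marks exit=%v err=%v\n", tc.configured, tc.sig, ok, err)
	}
}
```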

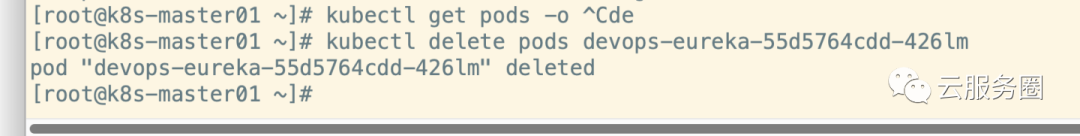

Deletion test