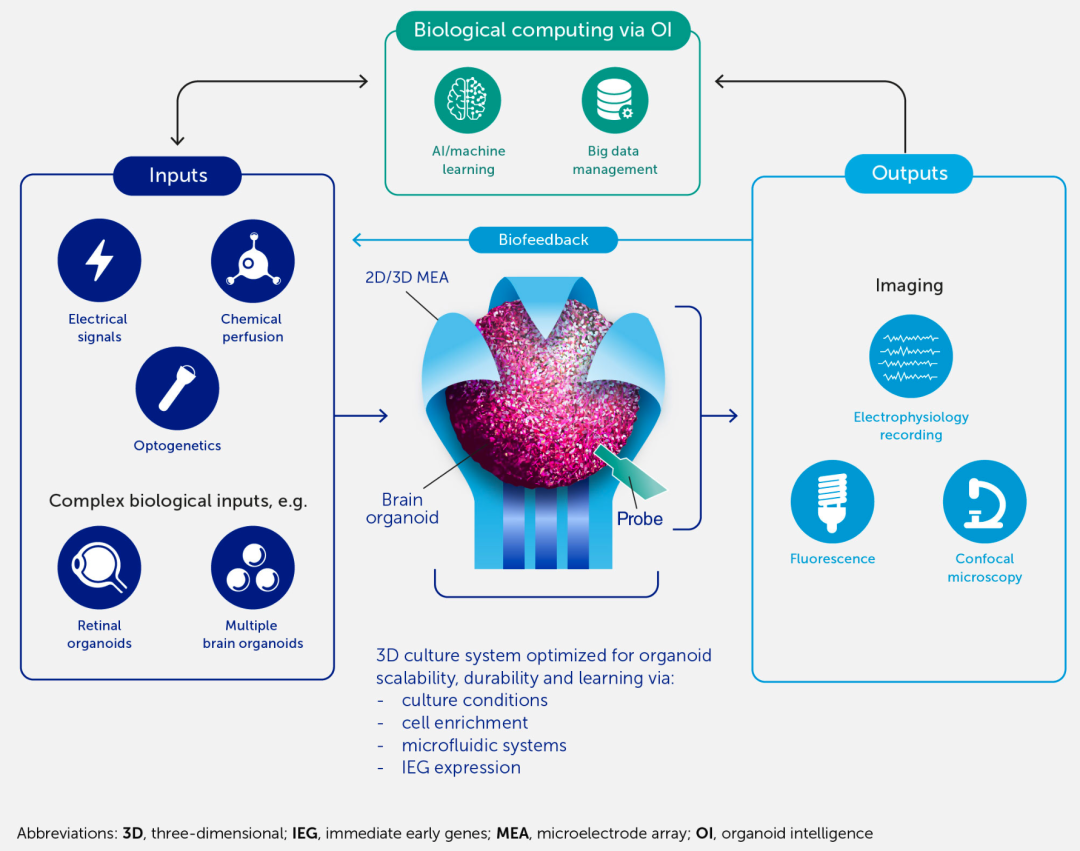

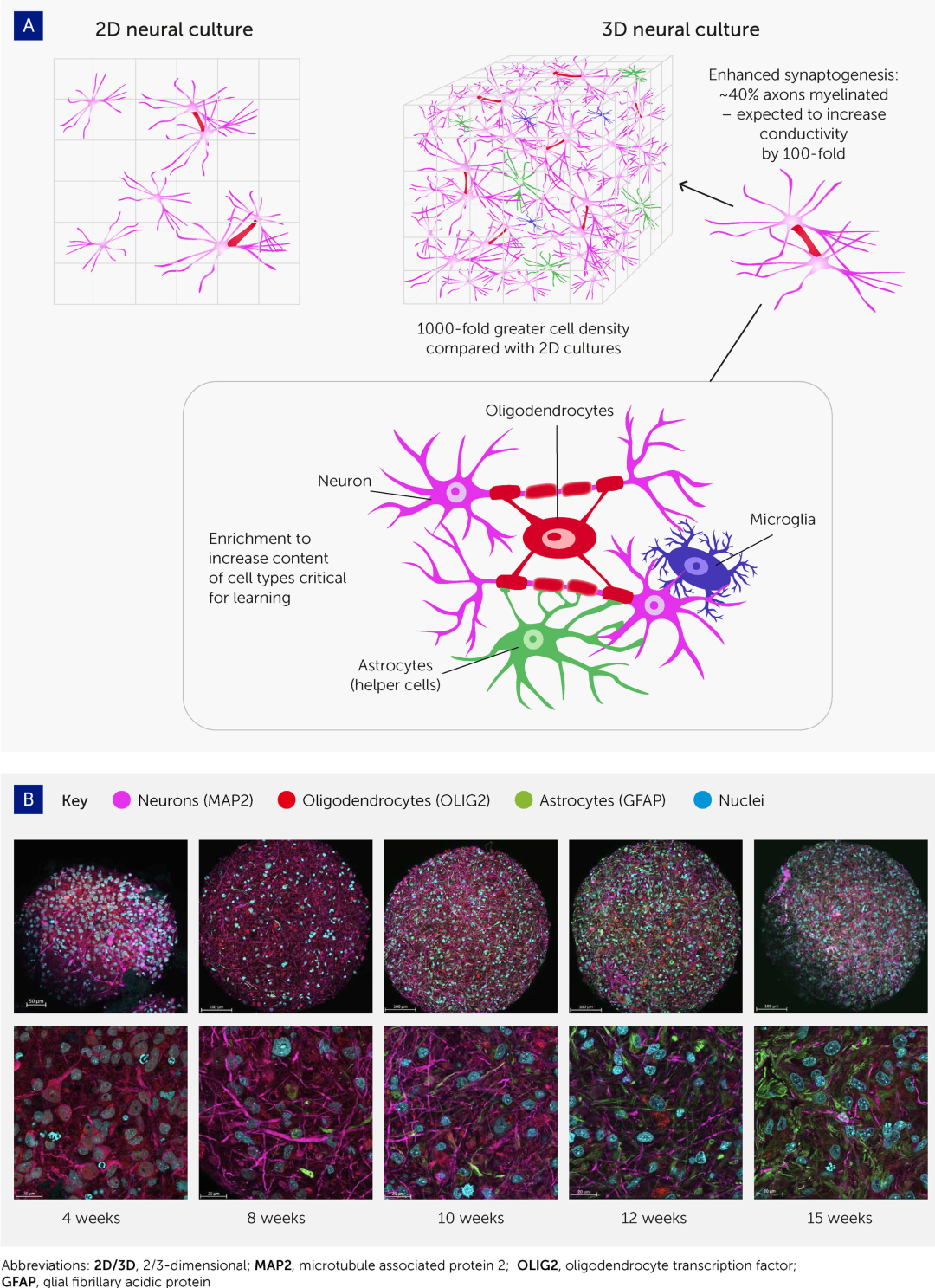

Recent advances in human stem cell-derived brain organoids promise to replicate critical molecular and cellular aspects of learning and memory and possibly aspects of cognition in vitro. Coining the term “organoid intelligence” (OI) to encompass these developments, we present a collaborative program to implement the vision of a multidisciplinary field of OI. This aims to establish OI as a form of genuine biological computing that harnesses brain organoids using scientific and bioengineering advances in an ethically responsible manner. Standardized, 3D, myelinated brain organoids can now be produced with high cell density and enriched levels of glial cells and gene expression critical for learning. Integrated microfluidic perfusion systems can support scalable and durable culturing, and spatiotemporal chemical signaling. Novel 3D microelectrode arrays permit high-resolution spatiotemporal electrophysiological signaling and recording to explore the capacity of brain organoids to recapitulate the molecular mechanisms of learning and memory formation and, ultimately, their computational potential. Technologies that could enable novel biocomputing models via stimulus-response training and organoid-computer interfaces are in development. We envisage complex, networked interfaces whereby brain organoids are connected with real-world sensors and output devices, and ultimately with each other and with sensory organ organoids (e.g. retinal organoids), and are trained using biofeedback, big-data warehousing, and machine learning methods. In parallel, we emphasize an embedded ethics approach to analyze the ethical aspects raised by OI research in an iterative, collaborative manner involving all relevant stakeholders. The many possible applications of this research urge the strategic development of OI as a scientific discipline. We anticipate OI-based biocomputing systems to allow faster decision-making, continuous learning during tasks, and greater energy and data efficiency. 
Furthermore, the development of “intelligence-in-a-dish” could help elucidate the pathophysiology of devastating developmental and degenerative diseases (such as dementia), potentially aiding the identification of novel therapeutic approaches to address major global unmet needs.

Key points

- Biological computing (or biocomputing) could be faster, more efficient, and more powerful than silicon-based computing and AI, and only require a fraction of the energy.

- ‘Organoid intelligence’ (OI) describes an emerging multidisciplinary field working to develop biological computing using 3D cultures of human brain cells (brain organoids) and brain-machine interface technologies.

- OI requires scaling up current brain organoids into complex, durable 3D structures enriched with cells and genes associated with learning, and connecting these to next-generation input and output devices and AI/machine learning systems.

- OI requires new models, algorithms, and interface technologies to communicate with brain organoids, understand how they learn and compute, and process and store the massive amounts of data they will generate.

- OI research could also improve our understanding of brain development, learning, and memory, potentially helping to find treatments for neurological disorders such as dementia.

- Ensuring OI develops in an ethically and socially responsive manner requires an ‘embedded ethics’ approach where interdisciplinary and representative teams of ethicists, researchers, and members of the public identify, discuss, and analyze ethical issues and feed these back to inform future research and work.

Introduction

Human brains are slower than machines at processing simple information, such as arithmetic, but they far surpass machines in processing complex information as brains deal better with few and/or uncertain data. Brains can perform both sequential and parallel processing (whereas computers can do only the former), and they outperform computers in decision-making on large, highly heterogeneous, and incomplete datasets and other challenging forms of processing. The processing power of the brain is illustrated by the observation that in 2013, the world’s fourth-largest computer took 40 minutes to model 1 second of 1% of a human’s brain activity (1). Moreover, each brain has a storage capacity estimated at 2,500 TB, based on its 86–100 billion neurons having more than $10^{15}$ connections (2, 3). In this article, we describe the emerging field that we term “organoid intelligence” (OI), which aims to leverage the extraordinary biological processing power of the brain.

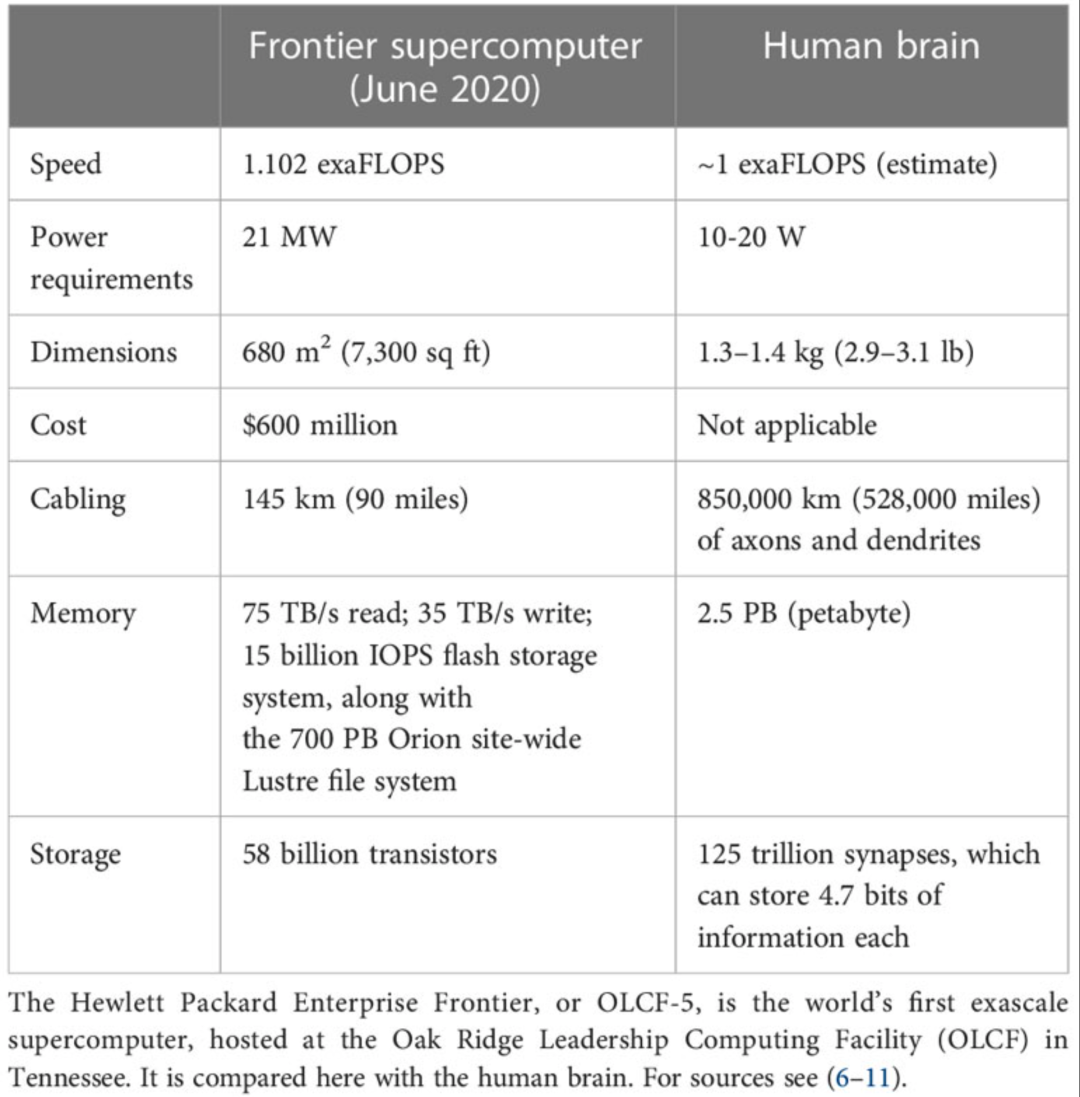

Superficially, both biological learning and machine learning/AI by an intelligent agent build internal representations of the world to improve their performance in conducting tasks. However, fundamental differences between biological and machine learning in the mechanisms of implementation and their goals result in two drastically different efficiencies. First, biological learning uses far less power to solve computational problems. For example, a larval zebrafish navigates the world to successfully hunt prey and avoid predators (4) using only 0.1 microwatts (5), while a human adult consumes 100 watts, of which brain consumption constitutes 20% (6, 7). In contrast, clusters used to master state-of-the-art machine learning models typically operate at around $10^{6}$ watts. Since June 2022, the USA’s Frontier has been the world’s most powerful supercomputer, reaching 1102 petaFlops (1.102 exaFlops) on the LINPACK benchmark. The power consumption of the new supercomputer is 21 megawatts, while the human brain operates at an estimated 1 exaFlop, roughly the same rate, and consumes only 20 watts (Table 1) (8–11). Thus, humans operate at a $10^{6}$-fold better power efficiency relative to modern machines, albeit while performing quite different tasks.
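The roughly million-fold power-efficiency gap follows directly from the figures quoted above. The short Python sketch below reproduces that arithmetic; the variable and function names are illustrative, and every numeric value is taken from the text rather than measured independently.

```python
import math

# Figures quoted in the text (not independent measurements).
FRONTIER_FLOPS = 1.102e18    # 1102 petaFlops on the LINPACK benchmark
FRONTIER_POWER_W = 21e6      # 21 megawatts
BRAIN_FLOPS = 1e18           # estimated 1 exaFlop
ADULT_POWER_W = 100.0        # whole-body consumption of a human adult
BRAIN_SHARE = 0.20           # fraction of that consumed by the brain


def flops_per_watt(flops: float, watts: float) -> float:
    """Operations per second delivered per watt of power."""
    return flops / watts


# 20% of 100 W gives the 20 W brain figure used in the comparison.
brain_power_w = ADULT_POWER_W * BRAIN_SHARE

frontier_eff = flops_per_watt(FRONTIER_FLOPS, FRONTIER_POWER_W)
brain_eff = flops_per_watt(BRAIN_FLOPS, brain_power_w)

# Ratio of the two efficiencies and its order of magnitude.
efficiency_ratio = brain_eff / frontier_eff
order_of_magnitude = round(math.log10(efficiency_ratio))

print(f"Brain power: {brain_power_w:.0f} W")
print(f"Efficiency ratio (brain / Frontier): {efficiency_ratio:.3g}")
print(f"Order of magnitude: 10^{order_of_magnitude}")
```

With these inputs the ratio comes out slightly below one million, which rounds to the order of magnitude given in the text. As the text notes, the two systems perform quite different tasks, so the ratio is an illustration rather than a like-for-like benchmark.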

Second, biological learning uses fewer observations to learn how to solve problems. For example, humans learn a simple “same-versus-different” task using around 10 training samples (12); simpler organisms, such as honeybees, also need remarkably few samples (~$10^{2}$) (13). In contrast, in 2011, machines could not learn these distinctions even with $10^{6}$ samples (14) and in 2018, $10^{7}$ samples remained insufficient (15). Thus, in this sense, at least, humans operate at a >$10^{6}$ times better data efficiency than modern machines. The AlphaGo system, which beat the world champion at the complex game Go, offers a concrete illustration (16, 17). AlphaGo was trained on data from 160,000 games (17); a human playing for five hours/day would have to play continuously for more than 175 years to experience the same number of training games, indicating the far higher efficiency of the brain in this complex learning activity. The implication is that AI and machine-learning approaches have limited usefulness for tasks requiring real-time learning and dynamic actions in a changing environment. The power and efficiency advantages of biological computing over machine learning are multiplicative. If it takes the same amount of time per sample in a human or machine, then learning a new task requires $10^{10}$ times more energy for the machine. AlphaGo was trained for 4 weeks using 50 graphics processing units (GPUs) (17), requiring approximately $4 \times 10^{10}$ J of energy, about the same amount of energy required to sustain the metabolism of an active adult human for a decade. This high energy consumption prevents AI from achieving many aspirational goals, for example matching or exceeding human capabilities for complex tasks such as driving (18). Even large multinational corporations are beginning to reach the limits of machine learning owing to its inefficiencies (19), and the associated exponential increase in energy consumption is unsustainable (20), especially if technology companies are to adhere to their commitments to become carbon negative by 2030 (21, 22). At a national level, already in 2016 it took the equivalent of 34 coal-powered plants, each generating 500 megawatts, to meet the power demands of US-based data centers (23). Being much more energy efficient than current computers, human brains could theoretically meet the same US data storage capacity using only 1,600 kilowatts of power. Notably, the power demands of any current or future implementation of OI are very different from the energy consumption of the human body, especially considering the energy footprint of modern cell culture relative to small organoids today. These comparisons of brains and computers serve only as illustrations of the high efficiency of the human brain.
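The AlphaGo and data-center figures in the preceding paragraph can be cross-checked with a few lines of arithmetic. The Python sketch below is illustrative only: it uses the numbers quoted in the text, assumes the 100-watt adult power figure given earlier in the Introduction, and derives two quantities that the text implies but does not state (the average power per GPU and the length of a Go game). The variable names are hypothetical.

```python
SECONDS_PER_DAY = 24 * 3600
SECONDS_PER_YEAR = 365.25 * SECONDS_PER_DAY

# Figures quoted in the text.
ALPHAGO_ENERGY_J = 4e10                    # approximate training energy
ALPHAGO_GPUS = 50
ALPHAGO_TRAINING_S = 4 * 7 * SECONDS_PER_DAY   # 4 weeks
ALPHAGO_GAMES = 160_000
HUMAN_HOURS_PER_DAY = 5
HUMAN_YEARS_OF_PLAY = 175
HUMAN_POWER_W = 100.0                      # adult power quoted earlier
COAL_PLANTS = 34
PLANT_POWER_W = 500e6                      # 500 megawatts each

# Implied (not stated in the text): average power drawn per GPU.
implied_gpu_power_w = ALPHAGO_ENERGY_J / (ALPHAGO_GPUS * ALPHAGO_TRAINING_S)

# Years a 100 W human metabolism could run on the same energy.
human_years = ALPHAGO_ENERGY_J / (HUMAN_POWER_W * SECONDS_PER_YEAR)

# Implied (not stated in the text): hours per game if 175 years of
# 5 hours/day corresponds to 160,000 games.
hours_per_game = (HUMAN_YEARS_OF_PLAY * 365.25 * HUMAN_HOURS_PER_DAY) / ALPHAGO_GAMES

# Total power attributed to US data centers in 2016.
datacenter_power_w = COAL_PLANTS * PLANT_POWER_W

print(f"Implied power per GPU: {implied_gpu_power_w:.0f} W")
print(f"Equivalent human metabolism: {human_years:.1f} years")
print(f"Implied game length: {hours_per_game:.1f} hours")
print(f"US data-center power: {datacenter_power_w / 1e9:.0f} GW")
```

Under these assumptions the training energy corresponds to a little over twelve years of human metabolism, consistent with the "about a decade" comparison, and the 175-year estimate implies games of roughly two hours each.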

For the full content, please refer to the original paper.